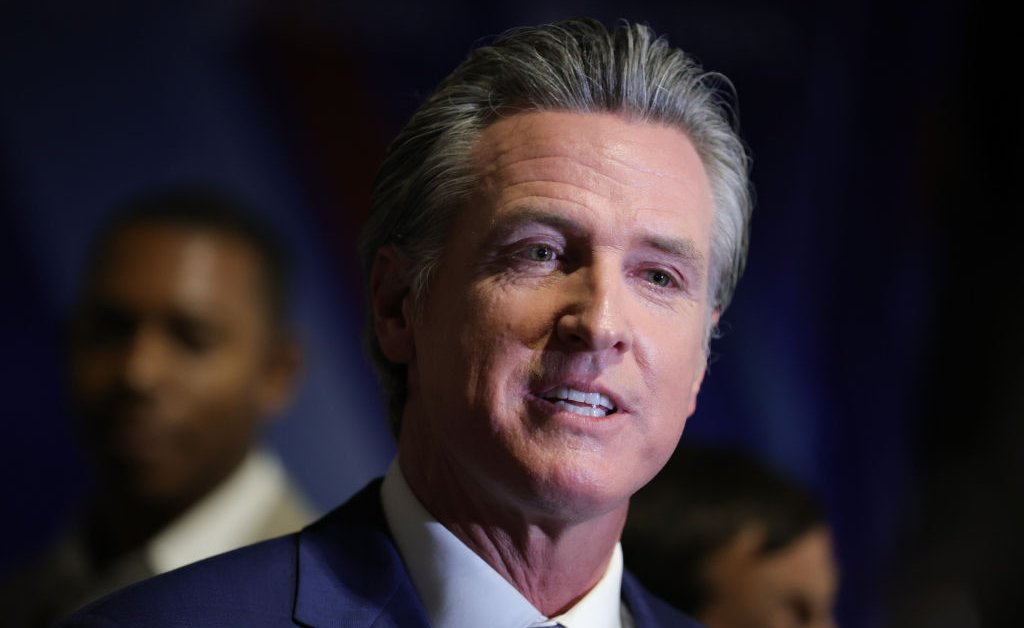

California Governor Gavin Newsom recently vetoed a bill that would have established comprehensive regulations on artificial intelligence (AI) safety. It would have been one of the first policies in the U.S. to hold AI developers accountable for any severe harm caused by their technologies. The bill drew criticism from prominent Democrats and from technology companies and investors such as OpenAI and Andreessen Horowitz, who argued that it could impede innovation in the state. Newsom acknowledged the bill as “well-intentioned” but expressed concerns that it could apply stringent standards to even basic AI functions. He emphasized the need for regulation based on empirical evidence and science, referring to his own executive order on AI and other bills he has signed to address known risks like deepfakes.

The debate over California’s SB 1047 highlights the dilemma lawmakers worldwide face in regulating AI risks while supporting the advancement of the technology. Despite the global boom in generative AI following the release of ChatGPT two years ago, U.S. policymakers have yet to enact comprehensive legislation governing the technology. Democratic state Senator Scott Wiener, who introduced SB 1047, called Newsom’s veto a setback for oversight of large corporations, stating that everyone is less safe as a result of the decision.

Had it become law, SB 1047 would have required companies developing powerful AI models to take reasonable care to prevent severe harm such as mass casualties or property damage exceeding $500 million. Specific precautions, including maintaining a kill switch to deactivate the technology, would have been mandated, and AI models would have been subject to third-party testing to minimize grave risks. The bill aimed to establish a framework for ensuring accountability and safety in the development and deployment of AI technologies.

Newsom’s veto of SB 1047 underscores the ongoing challenge of implementing effective AI regulations that balance innovation with safety concerns. While acknowledging the importance of oversight in preventing harm from AI technologies, Newsom expressed reservations about the bill’s potential impact on basic AI functions. He emphasized the need for regulatory measures grounded in empirical evidence and science, pointing to his executive order on AI and to legislation he has signed addressing specific AI risks like deepfakes.

The decision to veto SB 1047 reflects the complexity of regulating emerging technologies like AI, which hold significant promise but also pose potential risks. As lawmakers work to develop effective policies to govern AI, the debate surrounding California’s failed bill shows how difficult it is to strike a balance between promoting innovation and ensuring public safety. While the veto may have disappointed advocates of AI oversight, it also highlights the continuing challenge of crafting regulatory frameworks for rapidly evolving technologies.