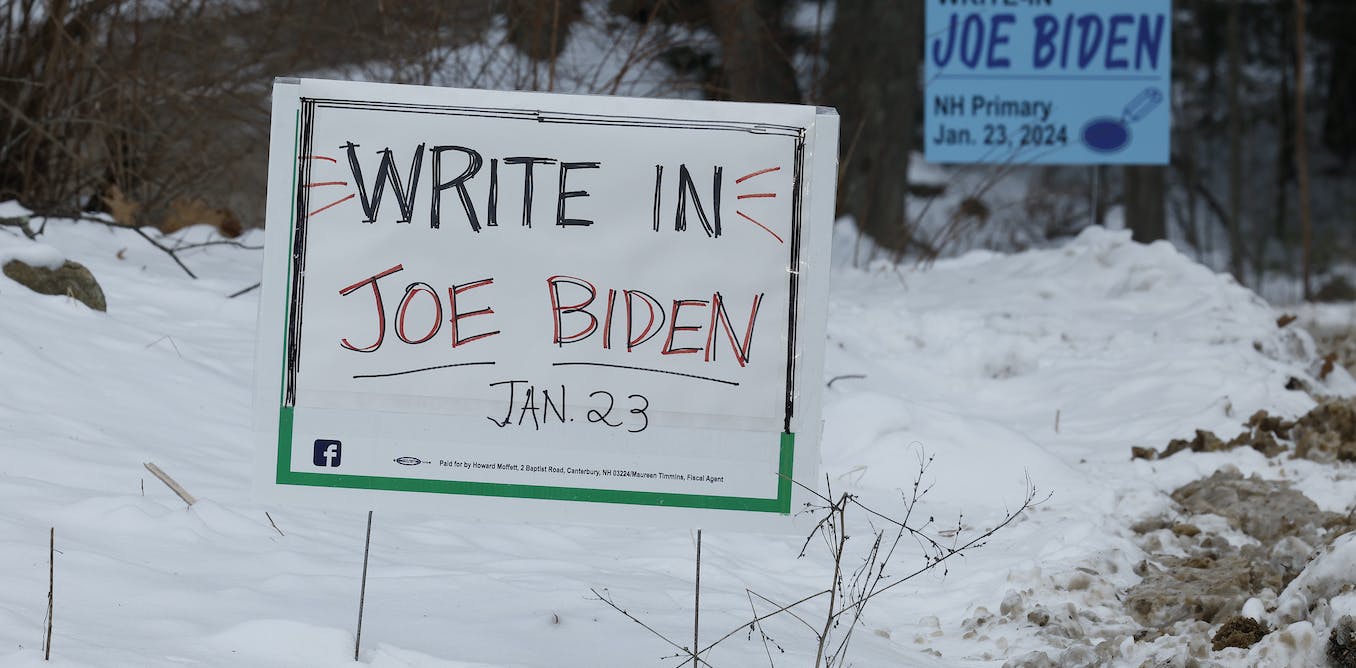

Recently, an unknown number of New Hampshire voters received a phone call from what sounded like President Joe Biden. The voice urged voters inclined to support Biden and the Democratic Party not to participate in the state's GOP primary election. The call falsely implied that a registered Democrat could vote in the Republican primary. It also falsely implied that voting in the primary would make a voter ineligible to vote in the general election in November. The call appears to be an artificial intelligence deepfake designed to discourage people from voting. The New Hampshire attorney general's office is investigating the call.

Robocalls in elections are nothing new and are not illegal in themselves: they have been used both in efforts to get out the vote and in voter suppression campaigns. Using AI to clone Biden's voice compounds the problem, because in a media ecosystem full of noise it is increasingly difficult to tell facts from fakes. Companies that sell impersonation services online have made it easy for anyone to create deepfakes of politicians, celebrities or executives, which makes discerning the truth even harder.

AI-enhanced disinformation campaigns are difficult to counter because unmasking the source requires tracking the trail of metadata, which is the data about a piece of media. Research on audio and video manipulation has also become harder as big tech companies have shut down access to their APIs and laid off their trust and safety teams. To combat these campaigns, the public needs to remain skeptical of claims that do not come from verified sources and to understand what new audio and visual manipulation technology can do.

To protect democracy, it is crucial to design social media systems that prioritize timely, accurate, local knowledge over disruption and divisiveness. Law enforcement authorities should also investigate and prosecute those who maliciously use technology to suppress voter turnout. Deepfakes may catch people by surprise, but society does not have to be caught off guard: it can choose to value the truth over the speed at which disinformation spreads.